In an era of rapidly evolving cybersecurity threats, traditional methods of assessing vulnerabilities are struggling to keep pace with the complexity and sophistication of modern attack vectors. Red Teaming and penetration testing, cornerstone practices in organizational defense strategies, often fall short in emulating the nuanced tactics of advanced adversaries. Generative Artificial Intelligence (GAI) and Large Language Models (LLMs), accessed through tools such as ShellGPT, have the potential to transform these practices by making them more precise, adaptable, and scalable.

The Challenges of Traditional Red Teaming

Red Teaming exercises are designed to simulate real-world attacks, exposing organizational vulnerabilities by mimicking the strategies of cybercriminals. However, traditional approaches often lack:

- Adaptability: Static methodologies fail to reflect the dynamic and unpredictable nature of cyber threats.

- Efficiency: Human-led simulations are resource-intensive and may overlook evolving attack patterns.

- Sophistication: Simulating advanced, multi-layered adversarial techniques remains a challenge, creating gaps in organizational preparedness.

These limitations underscore the need for innovative solutions to bolster cybersecurity practices.

The Role of Generative AI and LLMs in Cybersecurity

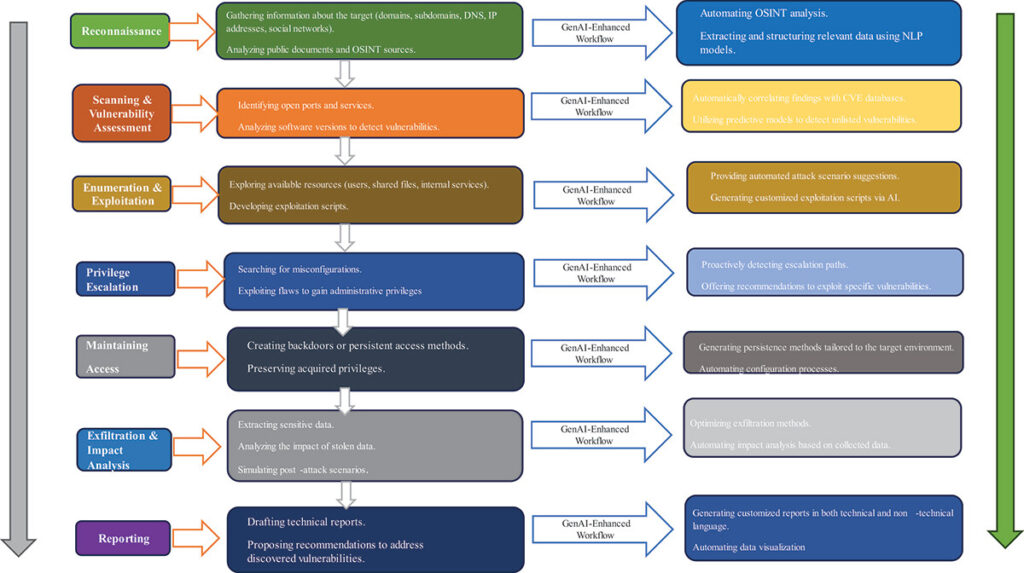

Integrating GAI and LLM-based tools such as ShellGPT offers a new approach to Red Teaming and penetration testing. By drawing on the capabilities of LLMs, organizations can strengthen each stage of the cybersecurity assessment process, including:

- Dynamic Reconnaissance: LLMs provide real-time, context-aware suggestions for gathering intelligence on target systems and networks.

- Advanced Exploitation: AI-driven frameworks simulate sophisticated attack techniques, enabling teams to test defenses against evolving threats effectively.

- Enhanced Reporting: Automating the generation of comprehensive pentest reports improves accuracy and reduces the time spent on documentation.
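Of these stages, reporting is the easiest to illustrate without any offensive tooling. The Python sketch below assumes findings have already been collected in a structured form; the `Finding` record, its fields, the four-level severity scale, and `build_report_prompt` are hypothetical names for illustration, not part of the framework described in the article. It orders findings from most to least severe and renders them into a prompt that an LLM could expand into report prose.

```python
from dataclasses import dataclass


# Hypothetical finding record; field names are illustrative assumptions.
@dataclass
class Finding:
    host: str
    title: str
    severity: str  # assumed scale: "critical", "high", "medium", "low"
    evidence: str


# Lower number = more severe, so sorting ascending puts critical issues first.
SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}


def build_report_prompt(findings: list[Finding]) -> str:
    """Render findings, most severe first, into a prompt for drafting a report."""
    ordered = sorted(findings, key=lambda f: SEVERITY_ORDER[f.severity])
    lines = [
        "Draft a penetration test report section for each finding below.",
        "For each, give a summary, the business impact, and remediation advice.",
        "",
    ]
    for i, f in enumerate(ordered, start=1):
        lines.append(f"{i}. [{f.severity.upper()}] {f.title} on {f.host}")
        lines.append(f"   Evidence: {f.evidence}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Example data using documentation-reserved IP addresses.
    findings = [
        Finding("192.0.2.5", "Outdated TLS configuration", "medium", "TLS 1.0 accepted"),
        Finding("192.0.2.7", "Default admin credentials", "critical", "admin/admin accepted"),
    ]
    print(build_report_prompt(findings))
```

The resulting text could then be sent to an LLM, for instance through ShellGPT, to draft the narrative sections. Keeping the findings structured means the ordering and evidence stay deterministic even though the generated prose does not, which makes the draft easier for a human tester to review.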

Testing the Framework: A Realistic Virtual Network

The research developed a novel AI-driven framework and tested it on a virtualized network designed to mimic real-world conditions. The authors report the following results:

- Increased Adaptability: The LLMs adapted to different scenarios, providing tailored suggestions for each stage of the testing process.

- Improved Precision: The simulations were more accurate in mimicking advanced adversarial techniques, providing a deeper understanding of vulnerabilities.

- Scalability: The framework addressed challenges related to scaling operations, making it applicable to organizations of varying sizes.

The Future of Cybersecurity

The integration of GAI and LLMs into Red Teaming exercises and penetration testing marks a paradigm shift in cybersecurity. By modernizing these practices, organizations can stay ahead of adversaries, anticipate emerging threats, and strengthen their defenses.

Key Takeaways

- Efficiency and Accuracy: LLMs streamline the simulation process while improving the precision of attack emulation.

- Advanced Threat Emulation: The ability to mimic complex adversarial techniques equips teams with tools to anticipate and mitigate sophisticated threats.

- Scalable Solutions: AI-driven frameworks can adapt to diverse organizational needs, making them accessible to a wide range of industries.

Conclusion

As cybersecurity threats grow more complex, the need for innovative and adaptive defense strategies becomes paramount. Generative AI and Large Language Models offer a glimpse of a future in which technology helps organizations safeguard their systems with greater efficiency and precision. By embracing these advancements, we can build a more secure digital landscape, prepared to meet the challenges of an ever-evolving threat environment.

Read the full article at: https://www.tandfonline.com/doi/full/10.1080/07366981.2024.2439628